go to UOP homepage

© Copyright 2012–2025

This is a set of notes on tips, tricks and preferred practices, referencing specific features of the Python language.

Argument Checking

One of the double-edged swords of Python is “duck” typing: if the value of some variable walks like a duck and talks like a duck, it is taken for granted that the object that variable references is a duck. In other words, there is no explicit type checking. If an object has all the member fields expected of it (including member functions), and these are themselves assigned to (or return) values that behave consistently with the expected types, then the code simply proceeds.

This can be very powerful. For example, if you write a piece of code with real floating-point numbers in mind, but none of the logic would be any different if all the real numbers were replaced by complex numbers, then you can simply use that same piece of code for both. Of course, you should check, verify and document that the code is more general, but, from then on, there is less to maintain, and therefore less to mess up. C++ puts you through h*** and back to achieve such a level of abstraction, even just for a simple generalization, and then you have to choose between base-classing, templating, or some mixture of both. May the good Lord help you if you generalize once, but not enough; you will do it all again.

On the other hand, since Python is not checking that you are doing what you think you are doing, some bits of code might run and give surprising answers. Perhaps, in the complex variant of some numerical code, you should multiply some number by its own complex conjugate at some point but, since you were thinking of real numbers, you just multiplied it by itself, which is syntactically legal and logically wrong. Then, if you accidentally give this code some complex input (or just assume it should work), Python will quietly give you the wrong answer (well, you did it, but Python was your enabler). This is the worst kind of bug. 
Generally people would prefer their code crash, rather than do something unreliable and not even tell them. Imagine how many calculations you might do with faulty code, wasting your time and computer time. Also, such bugs are harder to find. It's pretty easy to find where a piece of code crashes; Python informs you of this by default.
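
To make the danger concrete, here is a minimal sketch of the conjugate mistake described above. The function names `norm_squared` and `norm_squared_general` are invented for this illustration; they are not from any library.

```python
def norm_squared(values):
    """Sum of squares, written with only real numbers in mind."""
    return sum(v * v for v in values)


def norm_squared_general(values):
    """Same quantity, but correct for real and complex input alike."""
    # v * conj(v) is |v|**2; Python's int and float also have .conjugate()
    return sum((v * v.conjugate()).real for v in values)


real_input = [3.0, 4.0]
complex_input = [3.0 + 4.0j]

print(norm_squared(real_input))             # 25.0
print(norm_squared(complex_input))          # (-7+24j): no crash, just wrong
print(norm_squared_general(complex_input))  # 25.0
```

Nothing in the first function fails on complex input; it simply returns a number that is not a norm.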

Here is my recommendation on getting the best of both worlds.

This will not be practical everywhere, but you might have the forethought to use it when you smell that there might be some danger in passing in an incompatible type that might act the same most of the time (a goose, where a duck is expected).

Of course, you could just use Python's assert right out of the box.
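
For comparison, a bare assert at the top of a function might look like the following sketch (the function `scale` is hypothetical, chosen only to have something to guard). Keep in mind that assert statements are skipped when Python runs with the `-O` flag.

```python
def scale(factor, values):
    # Crash right away on anything that is not a plain real number.
    assert isinstance(factor, (int, float)), "factor must be real, got %r" % (factor,)
    return [factor * v for v in values]


print(scale(2, [1.0, 2.0]))   # [2.0, 4.0]
```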

What I am proposing is one level more refined.

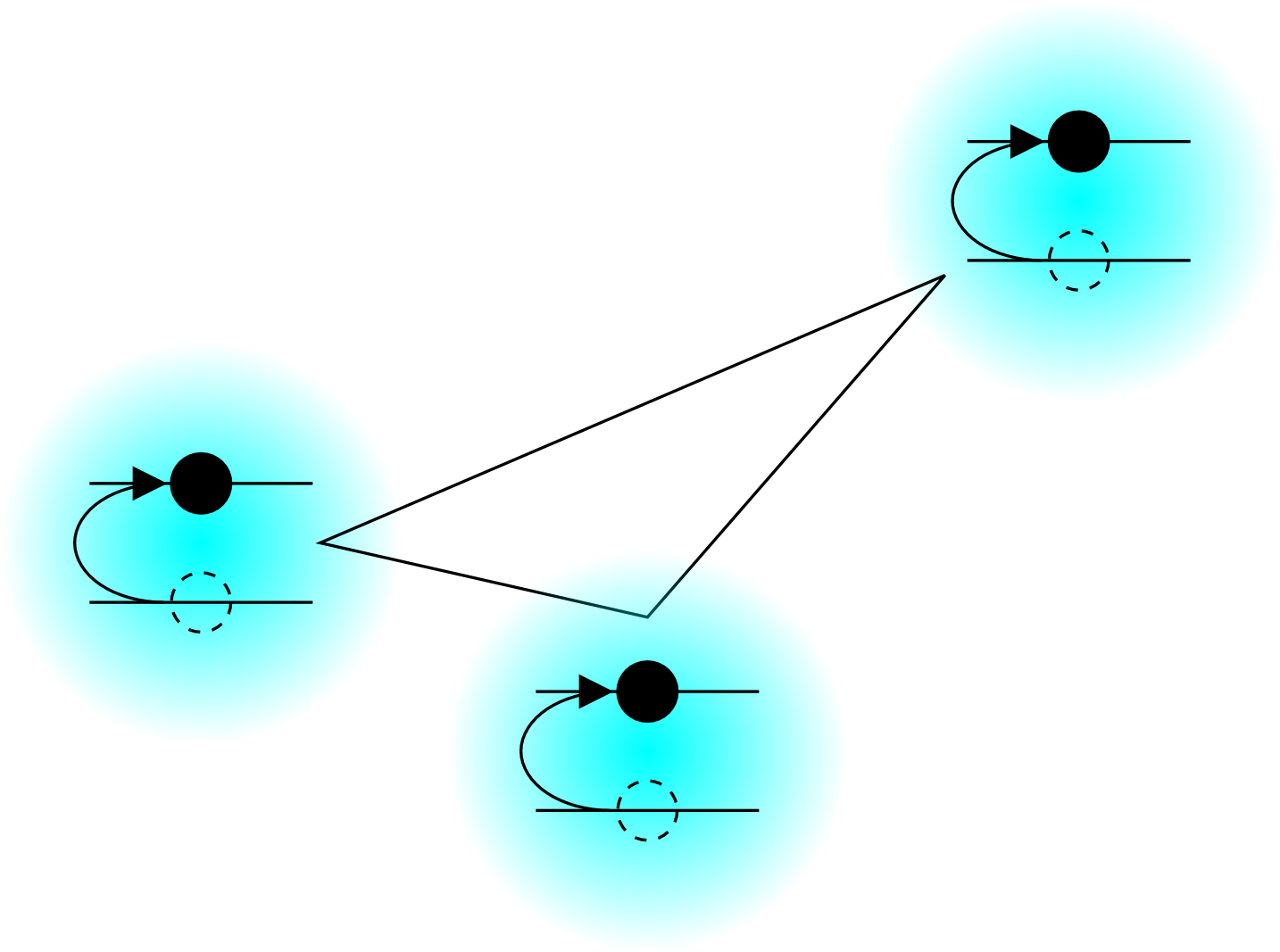

Say your code expects some arguments, v1, v2, etc. I like to start it with a call to a function that pre-parses the arguments (those that I am worried about, anyway; overkill destroys the human productivity gain you get with Python).
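
One way such a pre-parsing call might look is sketched here, assuming each argument is paired with a callable as a `(parse, value)` tuple. The names `parse_args`, `positive_int` and `repeat_scaled` are hypothetical, and this flat version is a simplification rather than the exact helper these notes have in mind.

```python
def parse_args(*pairs):
    """Turn each (parse, v) pair into parse(v), returned in order."""
    return [parse(v) for parse, v in pairs]


def positive_int(n):
    """Small parser written only for this purpose: assert, then pass through."""
    assert isinstance(n, int) and n > 0, "expected a positive integer, got %r" % (n,)
    return n


def repeat_scaled(x, n):
    # All argument checking and conversion happens in this one line.
    x, n = parse_args((float, x), (positive_int, n))
    # Expectation from here on: x is a float, n is a positive int.
    return [x] * n


print(repeat_scaled(2, 3))   # [2.0, 2.0, 2.0]
```

Passing a complex number as `x` raises a `TypeError` from `float`, so the function crashes at its first line instead of computing something questionable.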

Now, what happens at each level, where only a single tuple of the form (<parse>,v) is handled? It simply returns <parse>(v), appended to the list of such returns from below. The variable <parse> is something that you specify according to the local need, and the only restriction is that it is callable with one argument.

It can be a one-line function written only for this purpose that contains only an assert statement. It could be a built-in data type like float, which would up-convert an integer (and then you can write your remaining code with confidence that the variable is a float, which has consequences for division in Python 2.x), knowing that anything that converts to a float free of errors and warnings will be silently accepted; Python will complain if you give it a complex number for this. (If more than one argument would be needed, perhaps for dimension checking, then pack the other information that is to be passed along with the parsed object into a tuple or some wrapping class; the return value should still be as described, though.)

Finally, my favorite is to write specialized parsers, which are now modular and separate from the rest of the function that does the proper logical work. I usually start with an assert statement that only lets through those data types I am comfortable accepting at the moment, then I handle each one individually. In most of my applications, each of these various types has a well-defined conversion to some specific type, which my code can then rightfully assume it is working with. Often this involves converting a simple string to a structured object, of which a string is the piece most likely to vary, and defaults are safe assumptions if the user does not want to be weighed down by specifying more (or wants to roll with whatever defaults the latest and greatest version of my code uses). Perhaps the second most likely thing to vary in the full-fledged structure has an integer value; then the parser may accept an integer, or a tuple with a string and an integer [like ('light-blue',3) for a light blue line with weight 3, to be specific]. The possibilities are endless, but the point is that, in a single line, you can move all of your argument processing and checking elsewhere. Then you can just have a comment as to your expectations when that processing is done.
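
As an illustration of such a specialized parser, here is a minimal sketch built around the line-style example. The `LineStyle` structure, the `parse_line_style` name and both default values are assumptions made up for this sketch.

```python
from collections import namedtuple

# Hypothetical structured object the rest of the code would work with.
LineStyle = namedtuple("LineStyle", ["color", "weight"])

DEFAULT_COLOR = "black"   # assumed default, for illustration only
DEFAULT_WEIGHT = 1        # assumed default, for illustration only


def parse_line_style(spec):
    """Convert a string, an integer, or a (string, integer) tuple to LineStyle."""
    # Only let through the types I am comfortable accepting at the moment.
    assert isinstance(spec, (str, int, tuple)), "unsupported line style: %r" % (spec,)
    if isinstance(spec, LineStyle):
        return spec                              # already fully specified
    if isinstance(spec, str):
        return LineStyle(spec, DEFAULT_WEIGHT)   # color only
    if isinstance(spec, int):
        return LineStyle(DEFAULT_COLOR, spec)    # weight only
    color, weight = spec                         # (color, weight) tuple
    assert isinstance(color, str) and isinstance(weight, int), "bad tuple: %r" % (spec,)
    return LineStyle(color, weight)


print(parse_line_style("light-blue"))
print(parse_line_style(3))
print(parse_line_style(("light-blue", 3)))
```

Because the parser is callable with one argument, it can sit in a (<parse>,v) tuple exactly like float would.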