go to UOP homepage

go to UOP homepage

go to UOP homepage

go to UOP homepage

© Copyright 2012–2025

© Copyright 2012–2025

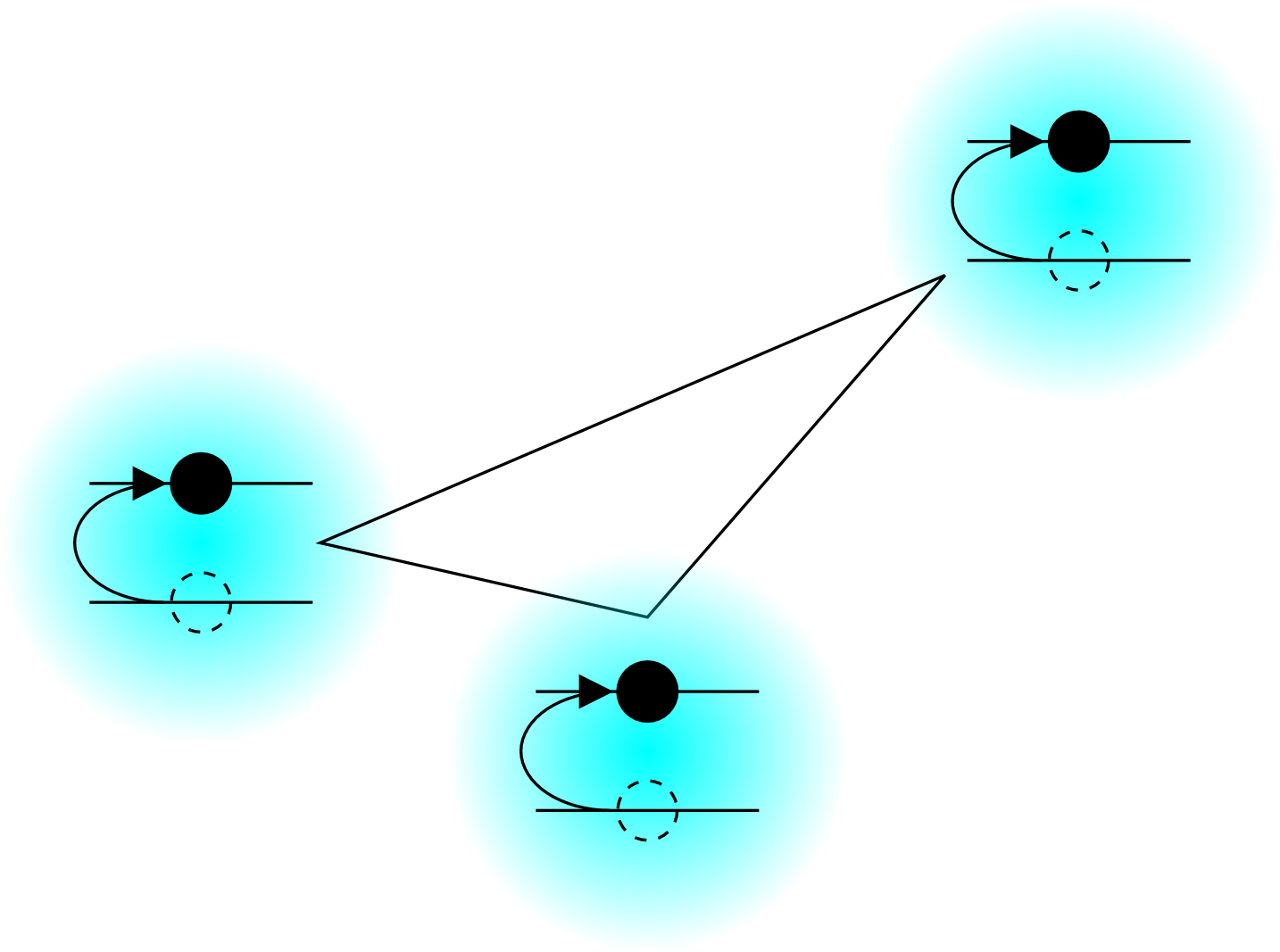

Implementation Philosophy In parallel computing you are looking to accomplish a speed-up of your program by doing multiple associated computations simultaneously (i.e., in parallel). Implementation of any kind of parallelism should be a local decision. No universal model should be thrust down from above. This is partly to liberate the individuals in the group, but it is also to account for the fact that our code will embed, and be embedded in, third-party tools, which have their own parallel models. That said, the best way to bring a maximally productive, and minimally invasive, level of order is for local pieces of code (functions?) to be informed of the resources available to them; i.e., is a given function allowed to run on multiple cores, and, if so, how many, and on which machines? If it is to be restricted at all, how much memory and disk is it allowed to use? Then, such a function can, in turn, partition the resources given to it as it sees fit. For a large body of code that may repeatedly subdivide total allocated resources, I recommend implementing (or borrowing) some sort of structure or class that recursively communicates this downward in a uniform matter (which can of course be hashed and pressed into the form expected by some third-party tool). Here is a page with an list of many possible packages (python libraries) for implementing parallelism, depending on the circumstances (task and hardware, including some fine-graining of the notions of SMP and distributed models discussed more generally below). Background: Threads and ProcessesAt any time, any computer is always apparently doing more than one thing; it can be playing music, have browser windows open, run office applications, and, in our group, be crunching numbers (some of our computers are reserved for only that purpose). This is in addition to all the maintenence utilities an OS is running all the time, just to stay alive. Any given CPU core, however, is only doing one thing at a time. If the machine has only one core (common until just recently), it is actually switching back and forth between all the different running tasks so quickly that you often cannot even tell; consider that CPU speeds are measured in GHz these days. The OS divides all the running tasks into processes, and processes may further subdivide themselves into multiple relatively independent sequences of operations called threads. Individual cores of a machine can be thought of as working on only a single thread at any time, switching between different threads of different processes as needed by the user, and as directed by the OS. If there are multiple processor cores, then multiple processes or multiple threads of the same process can literally be running simultaneously (rather than just appearing as such). Either concurrent processes or threads (or both) can be used as logical workers for implementing computational parallelism. In terms of the distinction between processes and threads, one might wonder at this point if the notion of a processes is simply a logical grouping of related threads, but it is more substantial than that. Foremostly, different processes run in different memory spaces. This is enforced at the level of the OS (at the level of the OS kernel even), and it means that one process cannot access the memory that the OS has allocated to other processes; otherwise buggy MS Office applications would routinely overwrite the memory storing the pages open in your browser, messing up your webmail. For related reasons, if a process crashes, other applications and the OS are usually unaffected (the user, however, may be pretty ticked off). Threads, on the other hand, all belong to the same process, and they can all see the exact same resources, including memory and open file handles. Multiple threads are spawned on the inside of a process, and it is the job of the programmer who requested their creation to keep them working together harmoniously, not messing up what each other is doing. The OS is informed of the existence of the different threads, as it is the OS that schedules the CPU resources to run all the threads of all the processes, but it is less concerned with what they are doing (as long as they don't make illegal requests for resources). The other major distinction between processes and threads is scheduling. Since processes are guaranteed to be completely independent* during the time that they are alive, only the OS kernel needs to be involved in their scheduling. This is true even if a user will later integrate the results of many processes into a larger whole. Though this scheduling may involve honoring requests by a process to run multiple threads, the OS is does not need to concern itself with the logical relationships between different threads of the same process, since that is the programmers responsibility. The kernel is a compact and efficient piece of software that is pretty much guaranteed to get the most out of all your cores.** For threads, however, once you spawn and dispatch them inside of the process created for your running software, with the intention of them being concurrent, they must first be passed through a scheduler associated with the software library that you used to create them, called a threading model, and only then does that software make the primitive requests to the kernel to have them run. This extra software layer is needed to make sure you get the expected result from your delicate managing of many tasks that all have access to the same data space, which you performed using the utilities provided by the threading model. The need for this scheduler means that performance can depend on the threading model you choose. For example, the threading module in python is awful for number crunching (read on). A note is in order about the relationship the total number of threads to total cores on one machine, which also applies to threads created by running many single-threaded processes. You can make as many threads or processes as you like, but, quite often, for computational tasks that are not waiting for any external events in order to keep crunching numbers, each thread will expect to have a core of its own to execute on. If you only make a few more total threads than you have cores, you will likely not see much of a slow-down or speed-up (could go either way, depending on subtle things). The threads will just take turns running on the physical cores, stopping intermittently when there is no core available to run on. However, if you make many more threads than you have cores available, then you will definitely see a slow down as OS and threading-model schedulers start to thrash around, constantly switching tasks in order to keep no one waiting too long (they don't question why any certain thread wants to run). Eventually the machine will grind to a halt, making it hard to even log in. Don't do this.

*

The exception is the disk. It can and does happen that multiple processes may try to access the same file on the disk.

Though things are getting better these days with improved file systems and software layers that put locks on files,

this is ultimately unavoidable because the disk is not a temporary work space the same way memory is,

and so it cannot be partitioned strictly by process;

every process has (roughly) equal right to the files on the disk.

Furthermore, the disk is too slow to catch and respond to multiple processes accessing the same file.

If you want to practice corrupting a file, open an .rtf file in both a simple editor and MS Word at the same time.

Some OSes will let you do this.

The words “machine” and “computer” are vague, but it is good to know that the former usually refers to the contents of a single, self-contained box of hardware, whereas the latter often can refer to a collection of machines, such as in the word “super-computer”. If we need to be precise, the word “node” will be used for individual machine in a super-computer or computer cluster. This word usually implies that it is part of a larger computational infrastructure of some sort. Nodes contain CPU chips, each of which may contain multiple execution cores, CPU cores being the logical workers that execute the threads discussed above. It is not usually of much interest how the cores are divided among CPUs in a node; what is most important (regarding parallelism) is the total number of cores on a given node. Recently, the idea, mentioned above, of each core only working on one thread at a time is starting to get blurry with Intel's introduction of hyperthreading, in which an expanded core can keep track of the state of two threads at the same time (presumably more in the future). However, these threads share a lot of the core's execution resources, and this is really only valuable if some external latency causes threads to stall and restart a lot, such that effectively one core can keep up with two stop-and-go threads, but without having two switch threads as often. In practice, for raw number crunching, I have seen negligible effect of having hyperthreading available; they do not act as even fractional additional cores. Symmetric Multiprocessing (SMP) vs. Distributed ComputingPractically speaking, the two most broad classes of parallel computing are those that use more than one core on the same machine and those that access resources on multiple machines. The definitions of “same” and “different” can be vague, but, if two cores have direct access the same memory, we will use that as a practical test for being on the same node (not saying that this is necessarily allowed by the OS). If you think about it, there is nothing to keep you from treating cores that live on the same node as if they were on different nodes. Recall from above that different processes on the same machine have unrelated memory spaces. Aside from practical considerations (communication time, partitioning of finite resources), they are as separate as if they were on different machines.* The converse is not really true because cores on different machines cannot work directly on the same chunk of memory.** For this reason, distributed computing (parallelism that uses multiple nodes, say in a cluster or super-computer) must be process-based, dividing what are likely going to be physically separated tasks into logically separate processes, whose results are harvested by a parent process, integrated into a whole and perhaps communicated at predefined points through predefined channels to other processes (which may be idle until the promised data is received ... these restarted processes can logically be thought of as brand new processes, but it is usually more efficient to continue old ones from their current state). Parallelism on the same node may be thread-based or process-based. Thread-based parallelism is often called symmetric multiprocessing (SMP) because each thread in a single process has the same, i.e., symmetric,*** access to the all the data in memory for that process. A non-trivial aspect of all this though is the of the programmer. Process-based parallelism can scale to more total cores by using many thousands of machines, if the communication latency does not kill the specific application. There are also advantages in terms of preventing bugs by making the programmer be explicit about communication, but actually getting good performance demands a lot of thought about what will be communicated and how. This planning must be done in advance of writing even hardly a single line of code, since different ways of subdividing the overall job can demand substantially different code, and one is not exempt from this forethought even if the target hardware is, in fact, a single machine (though less communication latency within one machine might make your performance less sensitive to strategic blunders). Thread-based parallelism can often be implemented in minutes as an after-thought to speed up a single time-intense loop, by breaking it up over many cores, for example. However, it is not scalable to any more cores than you have on the biggest single machine at your disposal, and if the task to be broken up is not trivial, it can be easy to introduce bugs by not properly coordinating reads and writes to the same location in memory. It should be emphasized that, there is nothing to prevent a programmer from dividing one a process on one node into threads, even if a parallel program is already running many such processes. A common “mixed-mode” of parallel programming is to set up one process per node and then divide this task into one thread per core on that node. Mixed-mode parallelism can be very effective, but it is the least logically symmetric. Whereas it would be a bad idea to not even think about what tasks are most closely related to each other in general distributed computing, and ensure that they run on the same node, in mixed-mode parallelism, wholly different programming models are chosen for on-node and across-node parallelism, even if all the tasks are equivalent and logically separate. This is one of the reasons that I think decisions about parallelism are best made locally and not enforced globally; each function can choose how to best partition the physical resources available to it, based also on the parallel capabilities of the functions it calls, making the form of overall parallelism maximally dynamic, and hopefully optimal (or, at least, optimizable). Per the above discussion, the remainder of this page is divided into discussions of thread-based parallelism and process-based parallelism, with these logical paradigms being most cleanly separable. These two parallel models can can be layered one on top of the other (in multiple layers) to achieve most efficient performance depending on the hardware conditions, such as clusters, grids, clouds or crowd-sourcing.

*

Again, with the exception of access to permanent files on disk.

Thread-based parallelism is a rather short discussion. Since we are limited to one machine, and since all threads are inherently equivalent (though they may be requested to do different things), a lot of major variables and decisions relevant to process-based parallelism are removed in thread-based parallelism. Simplicity is great most of the time, but simplicity and power are also often opposed. For C or C++, I recommend OpenMP. In the simplest case, a compiler directive in front of a loop, along with a compiler flag (and linking against the relevant library) is sufficient to get the job done. [give simple examples of directive and compiler command and brief explanation] Threading is one of a few places where python does not just have drawbacks, but it flatly fails to provide any worthwhile functionality for us. I was surprised to learn this, but, as I understand, the weakness is a fundamental one. At the core of the python experience is the fact that it is constantly doing a lot for you, garbage collection, reference counting, bounds checking, etc.. As best I understand, it is almost impossible to get all that fancy stuff synchronized if there is more than one thread running under the same python interpreter. So python has something literally called the global interpreter lock (GIL) which prevents the very concurrency we would be looking for by using the standard threading library. The scheduler of python's threading model only runs one thread as a time, even if more than one thread exists.* This might seem completely useless to people focused on number crunching, but remember there are others out there with different needs. If your threads will spend a lot of time waiting on data from elsewhere (perhaps accessing things over a network), then it helps to have many threads doing many such stop-and-go tasks, being cycled amongst by the scheduler, albeit, one at a time (one thread does some processing while another waits for data). For us, the threading module is indeed useless. That said, python has an amazingly easy interface to process-based parallelism on a single machine, available in the multiprocessing module discussed below, which is even modeled on the interface to threading. The documentation is pretty honest about the fact that this module exists in order to get around the GIL. * See also the discussion on this page, especially this link that is found therein. Process-based parallelismOn a Single Machine The de facto standard for process-based parallelism using C, C++ or Fortran is the message passing interface (MPI). This is the case whether one is targeting a single machine or many nodes, and so it will be discussed more under distributed computation, below. It is worth mentioning now already that MPI works with python as well, but if you are targeting a single machine, there is an easier way.

On a single machine, process-based parallelism can be implemented easily in python using the multiprocessing module.

The absolute simplest case is use of the class multiprocessing.Pool, looking something like this:

Distributed Computation [Coming soon, a discussion of MPI for C/C++ and python.] |